As vendors try to showcase their products, there is often confusion regarding the difference between two similar products, such as PanFS from Panasas, Inc. and Lustre (from Sun, then Oracle, then Whamcloud, then Intel). But in this particular case, the difference is not that big — you will see for yourself.

Panasas was founded in 1999 by Dr. Garth A. Gibson and Dr. William Courtright. Garth A. Gibson is a co-inventor of RAID technology, so it is no wonder that his company excels in storage products. Panasas provides appliances: hardware and software bundled together and fine-tuned for high performance. This has always been a “closed-source”, proprietary product, which translates into vendor lock-in.

Lustre has had a tumultuous history of corporate mergers and acquisitions. Eventually, the source code was published under the GNU GPL, but only a chosen few would dare to look inside; everyone else is happy with pre-patched Linux kernels. Most development work seems to be done by Whamcloud, because they have the necessary expertise. Recently, Whamcloud was acquired by Intel. In theory, Lustre is still open source, so anyone can contribute, but in practice you still need to ask a single company (formerly Whamcloud, now Intel) if you want new features added.

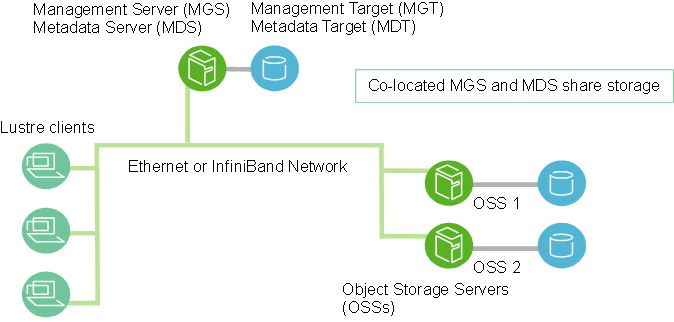

Similarity between the Panasas solution and Lustre is best represented by this figure taken from the Lustre manual:

To put it simply, when a client (on the left) wants to read a file, it sends the file name to a metadata server (green circle at the top). The metadata server responds with the locations of the parts of that file on several storage servers (two green circles on the right). The client can then query the storage servers directly over a high-performance network and retrieve the file parts. The idea is both simple and brilliant (and it’s called object-based storage).
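The read path just described can be sketched as a toy in-memory model. The class and function names (`MetadataServer`, `StorageServer`, `write_file`, `read_file`) are hypothetical and the round-robin layout is an assumption; this is not Lustre's actual protocol or API, only an illustration of how the metadata server hands out a layout while file data flows directly between the client and the storage servers.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Layout:
    stripe_size: int    # bytes per stripe unit
    servers: list[str]  # storage servers holding the file's objects, in stripe order


class MetadataServer:
    """Knows where each file's pieces live; never stores file data itself."""

    def __init__(self) -> None:
        self.layouts: dict[str, Layout] = {}

    def lookup(self, name: str) -> Layout:
        return self.layouts[name]


class StorageServer:
    """Stores one object (a byte string) per file."""

    def __init__(self) -> None:
        self.objects: dict[str, bytes] = {}

    def read_object(self, name: str) -> bytes:
        return self.objects[name]


def write_file(name, data, stripe_size, server_names, mds, storage):
    """Stripe data round-robin over the servers and record the layout on the MDS."""
    chunks = [bytearray() for _ in server_names]
    for k, start in enumerate(range(0, len(data), stripe_size)):
        chunks[k % len(server_names)] += data[start:start + stripe_size]
    for srv, chunk in zip(server_names, chunks):
        storage[srv].objects[name] = bytes(chunk)
    mds.layouts[name] = Layout(stripe_size, list(server_names))


def read_file(name, mds, storage):
    layout = mds.lookup(name)  # 1. one small request to the metadata server
    objs = [storage[s].read_object(name) for s in layout.servers]  # 2. data straight from storage
    n, size = len(objs), layout.stripe_size
    out, k = bytearray(), 0
    while True:  # 3. reassemble stripe units in file order
        start = (k // n) * size
        piece = objs[k % n][start:start + size]
        if not piece:
            break
        out += piece
        k += 1
    return bytes(out)
```

Note that `read_file` talks to the metadata server once and then pulls every byte from the storage servers, which is why adding storage servers can add bandwidth.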

So, that’s how Lustre works. But if we look at the Panasas® ActiveStor™ appliance, it is not so different. Its director blades correspond to Lustre’s metadata servers, and its storage blades correspond to object storage servers. One ActiveStor shelf (4U high) contains 11 blades, of which 1, 2, or 3 are director blades and the rest are storage blades (in additional expansion shelves, all 11 blades are storage blades). It is still the good old object storage architecture, just slightly disguised by the physical packaging.

Panasas ActiveStor has more features, such as per-file RAID and the ability to use many director blades simultaneously. By comparison, Lustre currently supports only one metadata server (MDS) per filesystem, or two MDSs in an active/passive failover configuration. On the other hand, Lustre is known for superior performance: up to 1.3 terabytes per second on the IBM Sequoia supercomputer. No Panasas-based storage solution of comparable scale has ever been built.

Additionally, Lustre can use any hardware of your choice for servers and storage devices. This can be a benefit if you want more flexibility, but it can turn against you if you need to debug your custom configuration — a problem that you won’t have with Panasas. The choice is yours!

Nice view from 100,000 feet!

Panasas PanFS and Lustre are both global parallel file systems that take the file server out of the data path, providing great performance scalability for traditional HPC workloads. But that’s pretty much where the comparison stops.

Panasas PanFS has ‘working’ user quotas, snapshots, active/active metadata failover, NFS failover, multi-protocol access (Panasas DirectFlow, parallel NFS, clustered NFS and clustered CIFS), superior file locking, and online capacity and performance expansion. I could go on... and I will: end-to-end data integrity, automatic capacity balancing, Tiered Parity with protection against media errors, parallel reconstructions, and scalable metadata.

Basically, if you want a reliable, high-productivity solution, choose Panasas. If you are happy to employ dedicated personnel to keep your system running and don’t mind a lot of downtime, choose Lustre.

Don’t get me wrong, Lustre has its place in the huge national supercomputing sites that employ armies of staff, paid with taxpayers’ money, to keep it running. But if you buy HPC to give your organisation a competitive edge, then you need the best!

A remark on per-file RAID: the FAST ’08 conference, run by USENIX, featured a paper by B. Welch et al. entitled “Scalable Performance of the Panasas Parallel File System”. Here is an excerpt:

“The fourth advantage of per-file RAID is that unrecoverable faults can be constrained to individual files. The most commonly encountered double-failure scenario with RAID-5 is an unrecoverable read error (i.e., grown media defect) during the reconstruction of a failed storage device. […] With per-file RAID-5, this sort of double failure means that only a single file is lost, and the specific file can be easily identified and reported to the administrator.”

A neat feature which, I suppose, Lustre lacks, because it relies on old-fashioned block-based RAID. (The paper also discusses other unique features of the Panasas solution.)
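The double-failure scenario from the excerpt can be illustrated with a toy per-file RAID 5 model. This is a minimal sketch assuming simple XOR parity and one unit per device; the function names are hypothetical and this is not the Panasas implementation. Because each file is encoded on its own, losing two units of one file affects that file only.

```python
from __future__ import annotations


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


def encode(data: bytes, devices: list[int]) -> tuple[int, dict[int, bytes]]:
    """Per-file RAID 5: split one file into len(devices)-1 data units plus one
    XOR parity unit, placing each unit on a different storage device."""
    k = len(devices) - 1
    unit = -(-len(data) // k)                 # ceiling division
    padded = data.ljust(unit * k, b"\0")
    units = [padded[i * unit:(i + 1) * unit] for i in range(k)]
    units.append(xor_blocks(units))           # parity goes on the last device
    return len(data), dict(zip(devices, units))


def decode(length: int, placement: dict[int, bytes], readable: set[int]) -> bytes | None:
    """Rebuild one file from its surviving units; None if two or more are lost."""
    missing = [d for d in placement if d not in readable]
    if len(missing) > 1:
        return None                           # double failure: only THIS file is lost
    units = {d: u for d, u in placement.items() if d in readable}
    if missing:
        # XOR of all surviving units (data + parity) equals the missing unit
        units[missing[0]] = xor_blocks(list(units.values()))
    data_devices = list(placement)[:-1]       # last device holds parity
    return b"".join(units[d] for d in data_devices)[:length]
```

For example, if device 0 fails and a media error hits file A's unit on device 1, `decode` returns `None` for file A alone, while a file B that also had a unit on device 0 is rebuilt from its parity.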

Another USENIX conference, HotStorage ’10, featured a paper boldly titled “Block-level RAID Is Dead”, which introduces Loris, a new storage stack.

Hi, Derek, and thanks for shedding some light on Panasas products.

Indeed, Lustre is not easy to install. I heard from a colleague that installing Panasas ActiveStor boils down to loading a Linux kernel module, after which it just works. Is it really that simple?

Now, regarding some of the features that you mentioned.

> active/active metadata failover

The question that I have here is: if I have 3 director blades in a shelf, is the load spread evenly between them? In other words, if I add more director blades to my system, will it raise metadata handling performance?

The next question is, can I add even more director blades to the expansion shelves, to have, say, 4 or 5 director blades in a big system with a single name space? Needless to say, the Panasas solution is already more scalable with respect to metadata handling than the current Lustre solution, and I am just curious how scalable your solution really is.

I guess the answer is “Yes” to all of the above questions, so I would ask one more question about capacity expansion. When new storage devices are added to a Lustre system, existing files become unevenly striped among the storage devices. Currently, the Lustre manual recommends dealing with this by creating a copy of a big file and then renaming it back to the original name. While the copy is being created, the newly added storage devices are used. This could be automated, but it is not.
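The copy-and-rename workaround can be scripted along these lines. A minimal sketch, assuming no other process has the file open during the copy and that the new copy picks up the directory's default striping; `restripe_by_copy` is a hypothetical name, not a Lustre tool, and only the Python standard library is used.

```python
import os
import shutil
import tempfile


def restripe_by_copy(path: str) -> None:
    """Rewrite a file by copying it and renaming the copy over the original.

    The copy is a brand-new file, so its objects can be allocated on newly
    added storage devices. Assumes nobody else has the file open meanwhile.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, prefix=".restripe-")
    os.close(fd)
    try:
        shutil.copy2(path, tmp)  # copy data plus mode bits and timestamps
        os.replace(tmp, path)    # atomic rename within the same directory
    except BaseException:
        os.unlink(tmp)           # never leave a half-written copy behind
        raise
```

Copying into the same directory keeps `os.replace` an atomic rename on one file system; note that hard links to the original file would be broken and ownership is not preserved.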

Therefore, the question is how Panasas ActiveStor would handle this situation when adding new expansion storage shelves to the system: would it silently start automatically redistributing files so that they occupy newly added storage devices, or is there a command or a GUI button that would start that process? I guess that’s what you called “automatic capacity balancing” in your comment, but I would just like to be sure.

Finally, I found out that Lustre allows striping to be specified on a per-file and per-directory basis. I guess this must be similar to the per-file RAID of Panasas ActiveStor (correct me if I am wrong). But, again, it has to be done manually from the command line. I suppose Panasas has a GUI for that.
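For reference, Lustre's per-file and per-directory striping is set with the `lfs setstripe` command, which takes a stripe count and a stripe size. The sketch below shows how such a layout maps a byte offset to a stripe object, assuming plain round-robin (RAID 0 style) placement; `locate` is a hypothetical helper, not part of Lustre. Unlike per-file RAID, this layout adds no parity of its own.

```python
from __future__ import annotations


def locate(offset: int, stripe_size: int, stripe_count: int) -> tuple[int, int]:
    """Map a byte offset in a file to (stripe index, offset inside that stripe's
    object), assuming plain round-robin striping."""
    unit = offset // stripe_size   # which stripe unit the byte falls in
    stripe = unit % stripe_count   # units rotate over the stripe objects
    row = unit // stripe_count     # full rotations completed before this unit
    return stripe, row * stripe_size + offset % stripe_size
```

With a 1 MiB stripe size and a stripe count of 4, byte 5 MiB + 10 falls in stripe unit 5, which lands on stripe object 1 at offset 1 MiB + 10.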

Konstantin,

may I contribute some answers? I have about three years of experience with Panasas and may be able to explain a bit of how the system works.

> The question that I have here is: if I have 3 director blades in a shelf, is the load spread evenly between them? In other words, if I add more director blades to my system, will it raise metadata handling performance?

Yes, the load is spread evenly and metadata performance can be enhanced by adding more director blades. Also, your (non-parallel) NFS and CIFS performance will rise, as director blades also work as NFS/CIFS gateways.

> The next question is, can I add even more director blades to the expansion shelves, to have, say, 4 or 5 director blades in a big system with a single name space? Needless to say, the Panasas solution is already more scalable with respect to metadata handling than the current Lustre solution, and I am just curious how scalable your solution really is.

Yes, you can. Choose an odd number of director blades (such as 5 or 7) so that a quorum can always be reached if director blades fail.
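The reasoning behind choosing an odd number can be checked with a little arithmetic. This assumes a strict-majority quorum (more than half of the director blades must be alive), which is a common rule but an assumption about Panasas here.

```python
def quorum_size(directors: int) -> int:
    """Smallest strict majority of the director blades (assumed quorum rule)."""
    return directors // 2 + 1


def tolerated_failures(directors: int) -> int:
    """How many directors can fail while a majority quorum is still reachable."""
    return directors - quorum_size(directors)


for n in range(1, 8):
    print(f"{n} directors: quorum {quorum_size(n)}, survives {tolerated_failures(n)} failure(s)")
```

Going from 3 to 4 directors still tolerates only one failure; the fifth director is what buys tolerance for a second one.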

> I guess the answer is “Yes” to all of the above questions, so I would ask one more question about capacity expansion. When new storage devices are added to a Lustre system, existing files become unevenly striped among storage devices. Currently, Lustre manual recommends to deal with it by creating a copy of a big file, and then renaming it back to the original name. During a copy creation, newly added storage devices become utilized. This could be automated, but it is not.

> Therefore, the question is how Panasas ActiveStor would handle this situation when adding new expansion storage shelves to the system: would it silently start automatically redistributing files so that they occupy newly added storage devices, or is there a command or a GUI button that would start that process? I guess that’s what you called “automatic capacity balancing” in your comment, but I would just like to be sure.

Yes, that is what is meant by automatic capacity balancing. When you add more storage and the new blades are online, the system automatically starts to migrate objects slowly until they are evenly distributed among the storage blades. This can be turned off, but I think it is on by default.
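A toy version of such capacity balancing is sketched below: objects are moved from the fullest blade to the emptiest until usage is within a tolerance. This is a greedy heuristic chosen for illustration, not Panasas's actual algorithm; the blade names and object sizes are hypothetical.

```python
from __future__ import annotations


def rebalance(blades: dict[str, list[int]], tolerance: int) -> list[tuple[str, str, int]]:
    """Greedy capacity balancing over object sizes (mutates `blades`).

    Returns the list of moves as (source blade, destination blade, object size).
    """
    used = {blade: sum(sizes) for blade, sizes in blades.items()}
    moves = []
    while True:
        src = max(used, key=used.get)  # fullest blade
        dst = min(used, key=used.get)  # emptiest blade
        gap = used[src] - used[dst]
        if gap <= tolerance:
            break
        # Moving an object of size s with 0 < s < gap narrows the gap and strictly
        # lowers the sum of squared usages, so the loop always terminates.
        candidates = [s for s in blades[src] if 0 < s < gap]
        if not candidates:
            break
        size = min(candidates, key=lambda s: abs(gap - 2 * s))  # closest to gap/2
        blades[src].remove(size)
        blades[dst].append(size)
        used[src] -= size
        used[dst] += size
        moves.append((src, dst, size))
    return moves
```

Adding an empty blade to two blades holding 100 units each, with a tolerance of 10, ends with usages of 60, 70 and 70 after two moves.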

> Finally, I found out that Lustre allows to specify striping on a per-file and per-directory basis. I guess this must be similar to per-file RAID of Panasas ActiveStor (correct me if I am wrong). But, again, it has to be done manually via command line. I suppose Panasas has got a GUI for that.

A file is automatically mirrored if (and as long as) it is smaller than 16 KB. Once it grows larger, it is striped according to a RAID 5 algorithm, and parity for each object is generated by the client. Together with end-to-end data integrity checking and vertical parity, this basically rules out sending corrupt data to the client.

The per-file behaviour is also configurable. You could use a RAID 1 for each file, so that large files are also mirrored, but that would cost you a lot of disk space.

A RAID 6 implementation is under development, I think.
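The per-file policy described above can be modelled roughly as follows, along with its raw capacity cost. Assumptions: "16k" is taken as 16 KiB, a RAID 5 stripe is `width` units wide with one parity unit, and padding and metadata are ignored; the function names are hypothetical.

```python
SMALL_FILE_LIMIT = 16 * 1024  # "16k" from the comment, assumed to mean 16 KiB


def choose_layout(size: int, force_mirror: bool = False) -> str:
    """Small files are mirrored; larger ones use RAID 5 unless mirroring is forced."""
    if force_mirror or size < SMALL_FILE_LIMIT:
        return "RAID 1"
    return "RAID 5"


def raw_bytes(size: int, width: int, force_mirror: bool = False) -> int:
    """Raw capacity a file consumes (ignores padding to block size and metadata)."""
    if choose_layout(size, force_mirror) == "RAID 1":
        return 2 * size                # two full copies
    unit = -(-size // (width - 1))     # ceiling division over width-1 data units
    return unit * width                # data units plus one parity unit
```

With an assumed width of 9 (8 data units plus parity), a 1 MiB file consumes about 1.125 MiB of raw space under RAID 5 but 2 MiB when mirrored, which is the disk-space cost mentioned above.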

Hope this helps,

Michael

Hi Michael,

Thanks for your input! I enjoyed your company at the ISC’12 supercomputing conference, and I appreciate that you took the time to answer the questions about Panasas.

With the rapid advent of pNFS, however, it seems that the future of both Panasas and Lustre is going to change!

Konstantin.