Science and engineering both rely on the continuous increase in supercomputing performance. Back in 2009, it was believed that exascale machines would become available by 2018; nine years ahead seemed like a lot of time. No one knew how much power exascale systems would require, but the seminal 2011 report by 65 authors (Jack Dongarra et al., “The International Exascale Software Project roadmap”, PDF) stated: “A politico-economic pain threshold of 25 megawatts has been suggested (by the DARPA) as a working boundary”.

Somehow, people came to perceive 25 megawatts as a feasible goal, while in fact it was only “a pain threshold”: a figure that is still acceptable, but beyond which stakeholders would start to feel “mental pain”.

Now, in 2014, it seems that both goals, the time frame (2018) and the expected power consumption (25 MW), will be missed. Analysts are cautiously talking about 2020, and discussions of power consumption are “omitted for clarity”, so to speak, because any serious discussion would quickly reveal how badly the power goal is going to be missed. Of course, power consumption contributes significantly to the operating cost of a supercomputer, but the biggest obstacle on the way to exascale is the capital (procurement) cost, and it is not being discussed at all.

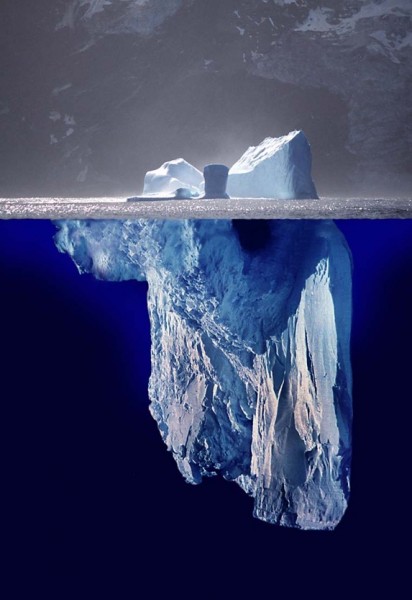

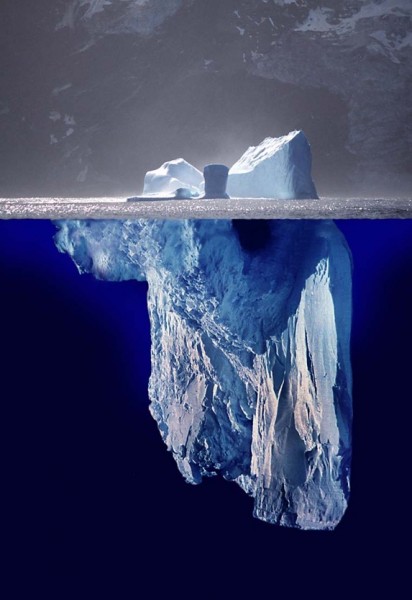

When discussing the economics of the exascale era, people often talk about power consumption and associated costs, but miss that these represent less than 1/3 of the total cost of ownership: they are only “the tip of the iceberg”. Image by Uwe Kils (iceberg) and Wiska Bodo (sky). Source: Wikimedia Commons.

Our community is like the Titanic: it is moving swiftly in the dark, allegedly towards its exascale goal. The passengers on the saloon deck, the scientists, are chatting about how nice it would be to have an exascale machine to solve many of the world’s pressing problems, and how they will ask for funds to keep their countries competitive. Only some of them look out of the windows and notice that there is an obstacle ahead, and even they do not speak out, so as not to spoil the mood.