There are a lot of good research projects going on in Europe: if you haven’t heard about them, it is simply because they are not receiving the media attention they deserve. One such project is the Fraunhofer parallel file system (FhGFS), which has been developed since 2005 by the Institute for Industrial Mathematics, part of the Fraunhofer Society.

There have been several major installations of FhGFS in recent years (and more are coming), but this year, 2013, appears to be a turning point for the project, marked by a substantial increase in download counts. The latest presentation, at SC13 in the USA, also attracted attention (you can fetch the slides at the project’s website, FhGFS.com).

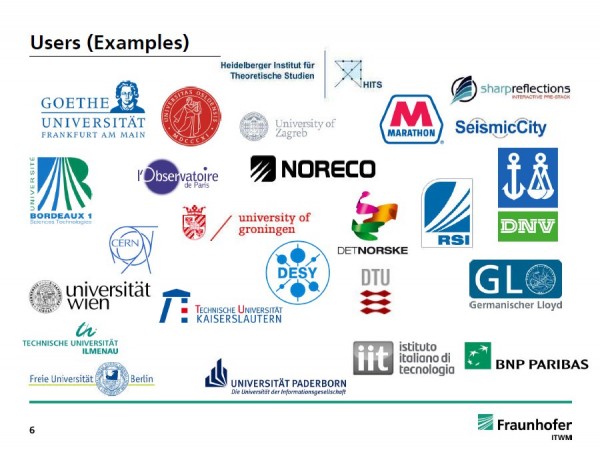

Users of FhGFS. Source: presentation made at SC13.

To learn what makes FhGFS so special, I decided to contact Sven Breuner, team lead for the FhGFS project. The conversation quickly became “an interview by e-mail”, which I present to you below.