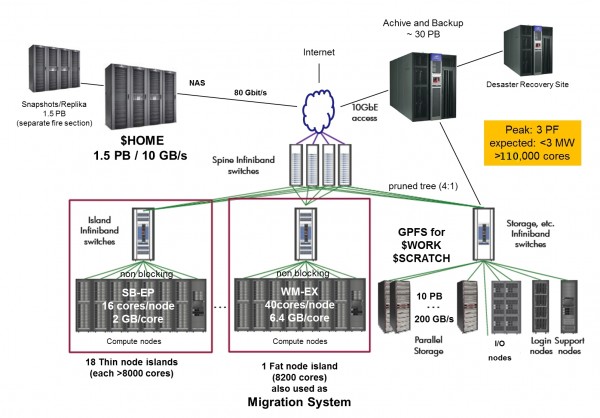

SuperMUC is a cluster supercomputer currently being deployed at the Leibniz Supercomputing Centre (LRZ) in Germany. As of today, 8,200 of its 110,000 cores have been put into operation: this is a so-called migration system for early user access. (UPD: Deployment was completed in July 2012.) SuperMUC consists of almost 9,500 compute nodes and has a peak performance of circa 3 petaflops.

The machine was designed by IBM. They chose to build a 4:1 blocking fat-tree network. Yours truly compared their solution with two other possible alternatives using the fat-tree design tool described on this site.

(Image copyright by LRZ, taken from this page)

To put it simply, IBM decided to create 18 islands of “thin nodes” (16 cores per node), one “fat node” island (40 cores per node), and one “scratch storage” island, 20 islands in total. Each island is connected in a non-blocking fashion to a large 648-port InfiniBand switch. Hence, there are 20 edge switches. Those edge switches, in turn, connect to the core level, which consists of four 648-port switches. (I have to admit that the 648-port switches are my guess, because no more detailed information is available. I assume a switch similar to the Mellanox IS5600 model. It has 648 ports and requires 31U of rack space: 29U for the switch itself and 2U for the shelf, as it weighs 327 kg in its full configuration.)

Let’s take a closer look at a thin island. It has 512 servers, each connected to the island’s edge switch. A quarter of that number, 128 ports, goes from the edge switch to the core level. Therefore, this two-level network has a 4:1 blocking ratio.
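The port budget of a thin island can be checked with a few lines of arithmetic. This is a minimal sketch, assuming the 648-port edge switch guessed above (the switch size is not confirmed) and 16 cores per thin node:

```python
# Port budget of one SuperMUC thin island.
# Assumption: the edge switch has 648 ports (a guess, as noted in the text).
EDGE_PORTS = 648
NODES_PER_ISLAND = 512
CORES_PER_NODE = 16

# One quarter of the down-link count goes up to the core level.
uplinks = NODES_PER_ISLAND // 4
ports_used = NODES_PER_ISLAND + uplinks

# The whole island, plus its uplinks, must fit on a single edge switch.
assert ports_used <= EDGE_PORTS, "island does not fit on one edge switch"

blocking_ratio = NODES_PER_ISLAND / uplinks
cores_per_island = NODES_PER_ISLAND * CORES_PER_NODE

print(f"uplinks: {uplinks}")                        # uplinks: 128
print(f"ports used: {ports_used} of {EDGE_PORTS}")  # ports used: 640 of 648
print(f"blocking ratio: {blocking_ratio:.0f}:1")    # blocking ratio: 4:1
print(f"cores per island: {cores_per_island}")      # cores per island: 8192
```

Eight ports of each edge switch remain unused, and the 8192 cores of one island are exactly the largest job that fits without crossing the blocking boundary.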

In the current set-up, compute nodes within each island can communicate in a non-blocking fashion. You can schedule a job that uses all 8192 cores of one thin island. But what happens if you have two thin islands, each of them half used? Then you still have 8192 cores, but now they are dispersed between two islands, and islands can only communicate with 4:1 blocking. In general, with a blocking set-up, communication is hindered for compute jobs that span multiple islands.

Instead, IBM could have built a non-blocking network. Let’s see what that would look like. To get the answer fast, I used the fat-tree design tool described on this site. I inserted a fictitious 648-port switch into the database and ran the tool in a special debug mode that output all possible network configurations rather than only the most cost-effective one.

The diagram above indicates that the “fat node” island contains only 205 nodes; besides, the structure of the “scratch storage” island is also unclear. Therefore, I designed the network as if it had 20 identical islands of 512 servers each, that is, 10,240 compute nodes.

First, I reproduced IBM’s result by requesting a 4:1 blocking network. The tool responded with 20 edge and 4 core switches, 24 switches in total. Cables between the edge and core layers run in bundles of 32, which is convenient.

Then I tried a non-blocking network. This time, the tool reported 32 edge and 16 core switches. In total, this is 48 switches, twice as much switch hardware as in the 4:1 blocking scenario. Cables run in bundles of 20. My “engineering evaluation” shows that a non-blocking network would raise the total cost of SuperMUC by approximately 15-20%, but compute jobs bigger than 8192 cores could then be run without concerns about blocking.
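The switch counts above follow from a simple sizing rule. The sketch below is not the fat-tree design tool used in this post; it is a simplified, hypothetical `size_two_level` function, assuming that each edge switch uses as many down ports as the blocking ratio allows, that nodes are spread evenly over the edge switches, and that every edge switch sends an equal bundle of cables to every core switch:

```python
import math


def size_two_level(nodes, edge_ports, core_ports, blocking):
    """Size a two-level fat tree (simplified sketch, not the design tool).

    blocking is the down:up ratio on each edge switch (4 for 4:1, 1 for
    non-blocking). Returns (edge_switches, core_switches, bundle_size).
    """
    # Largest number of down ports d with d + ceil(d / blocking) <= edge_ports.
    max_down = max(d for d in range(1, edge_ports + 1)
                   if d + math.ceil(d / blocking) <= edge_ports)
    edges = math.ceil(nodes / max_down)
    # Spread the nodes evenly over the edge switches.
    down = math.ceil(nodes / edges)
    up = math.ceil(down / blocking)
    # Assumption: every edge switch sends an equal bundle to every core
    # switch, so the core count must divide the uplink count, and each core
    # switch needs a port for every cable arriving at it.
    for cores in range(1, up + 1):
        if up % cores == 0 and edges * (up // cores) <= core_ports:
            return edges, cores, up // cores
    raise ValueError("no equal-bundle core layout fits these switches")


# 20 islands x 512 nodes, all switches with 648 ports (assumed).
print(size_two_level(10240, 648, 648, blocking=4))  # (20, 4, 32)
print(size_two_level(10240, 648, 648, blocking=1))  # (32, 16, 20)
```

Under these assumptions the rule reproduces both results: 24 switches with bundles of 32 for 4:1 blocking, and 48 switches with bundles of 20 for the non-blocking case. With `edge_ports=36` it returns `(569, 18, 1)`, the same counts as the hybrid alternative discussed below.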

I also considered a third alternative, and you will easily see why IBM didn’t choose it: build the edge layer from ordinary 36-port switches, but still use 648-port switches at the core level. For a non-blocking network, the tool reports that this configuration requires 569 edge 36-port switches and 18 core 648-port switches. In terms of cost, this network, while still conveniently non-blocking, is only about 10% more expensive than IBM’s current SuperMUC network, rather than roughly twice as expensive like the non-blocking alternative built entirely from 648-port switches.
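The counts for this hybrid layout can be checked by hand. A minimal sketch, assuming the idealized 10,240-node configuration and assuming that each edge switch connects exactly one cable to each core switch:

```python
import math

# Hybrid layout: 36-port edge switches, 648-port core switches, non-blocking,
# 10,240 nodes (the idealized 20 x 512 configuration used above).
NODES = 10240
EDGE_PORTS = 36
CORE_PORTS = 648

# Non-blocking: half of each edge switch faces the nodes, half faces the core.
down = up = EDGE_PORTS // 2
edge_switches = math.ceil(NODES / down)
# Assumption: one uplink from every edge switch to every core switch.
core_switches = up
# Each core switch therefore receives one cable per edge switch.
ports_per_core = edge_switches

assert ports_per_core <= CORE_PORTS
print(edge_switches, core_switches)    # 569 18
print(edge_switches + core_switches)   # 587
print(f"{ports_per_core} of {CORE_PORTS} ports used per core switch")  # 569 of 648 ports used per core switch
```

The total of 587 devices is the “almost 600 individual hardware items” mentioned in the next paragraph.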

Why didn’t IBM choose it? There are two reasons. The first is the sheer amount of hardware that needs to be managed: it is easier to manage 24 or 48 large switches than almost 600 individual devices.

The second reason, equally compelling, is cabling. In this scenario, cables between the edge and core layers run singly: every edge switch has 18 separate cables, one to each of the 18 core switches. These cables would also form “bundles” when they exit the racks and proceed to overhead cable trays, but that is not the same as a “real” bundle of 20 or 32 cables that jointly go from one switch to another and never diverge on their way. And don’t forget about 12x InfiniBand cables, each of which combines three 4x links. This way, a bundle of 20 or 32 links would consist of only 7 or 11 physical cables; this trick cannot be applied to the scenario with 36-port edge switches.
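The effect of 12x cables on the edge-to-core cabling can be estimated with a short calculation. This sketch counts only edge-to-core cables (node-to-edge cables are ignored) and assumes that every group of three 4x links in a bundle can share one 12x cable; `physical_cables` is a hypothetical helper, not part of any tool:

```python
import math

LINKS_PER_12X_CABLE = 3  # a 12x InfiniBand cable carries three 4x links


def physical_cables(bundle_links):
    """Cables for one edge-to-core bundle if 4x links are packed into 12x cables."""
    return math.ceil(bundle_links / LINKS_PER_12X_CABLE)


# (edge switches, core switches, links per bundle) for each layout in the text.
# With 36-port edge switches each bundle is a single link, so nothing can be packed.
layouts = {
    "648-port, 4:1 blocking": (20, 4, 32),
    "648-port, non-blocking": (32, 16, 20),
    "36-port edge, non-blocking": (569, 18, 1),
}

for name, (edges, cores, bundle) in layouts.items():
    per_bundle = physical_cables(bundle)
    total = edges * cores * per_bundle
    print(f"{name}: {per_bundle} cables per bundle, {total} in total")
# 648-port, 4:1 blocking: 11 cables per bundle, 880 in total
# 648-port, non-blocking: 7 cables per bundle, 3584 in total
# 36-port edge, non-blocking: 1 cables per bundle, 10242 in total
```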

In this post, we have reviewed three alternative fat-tree networks for the SuperMUC cluster. The non-blocking network requires twice as much switch hardware as the 4:1 blocking variant and is likely to raise the total cost of the cluster by 15-20%. Using 36-port switches at the edge level is a poor choice.