Sometimes I visit big vendors’ presentations where they talk about their new servers. The audience (systems administrators, engineers, technicians) listens very carefully, trying to grasp every word. They like to hear that the new server, equipped with the new CPUs, can deliver 20% or 40% higher performance “at the same price tag”.

Come on, guys. Don’t strain your ears. Isn’t it time for something new?

The 10 theses:

1. X86 architecture tried to remain backward compatible as it evolved during the last 34 years.

2. As a result, software manufacturers had no incentive to make their software parallel. They kept coding serial versions of their applications, hoping that each new X86 CPU would simply be faster than the previous one.

3. Now most opportunities to make a single core run faster are exhausted, and the only way to improve computer performance is to add more cores. But the software industry (including the compilers and languages segment) missed this “point of no return”. Frameworks for successful (easy) parallel programming are still in their infancy. Programmers are still not ready to think in terms of parallelism.

4. Future CPUs will most likely have lots of cores, but the cores will be simpler than the current ones. Some features, like out-of-order execution, are already being dropped (see Intel Atom, for example). Other features, such as hardware-assisted encryption, may migrate to the future CPUs.

5. The revolution has to start from software. Programmers must start “thinking parallel”. Compilers and toolchains must become mature enough to allow routine recompilation of existing software for different architectures.

6. Executable files should be able to run on multiple architectures (already true for ARM development) and be able to exploit whatever capabilities the CPU has (if a certain accelerator is present, it must be utilized).

7. The server segment is very conservative, while in the mobile world architectures come and go. The mobile segment may therefore become the driver of this innovation.

8. Once recompiling applications for a new architecture becomes routine, it will not be a problem to drop the old X86 architecture in favour of something new and exciting.

9. For commercial companies, developing and supporting multiple competing architectures is prohibitively expensive. History has seen this many times. New economic models are necessary. Perhaps making the architecture open-source, as with OpenSPARC?

10. If the HPC community clearly specifies what features the new architecture and microarchitecture should include, the resulting CPUs, produced at mass scale for the server and desktop markets, will also be usable for HPC clusters.
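Thesis 6 asks that a program use whatever the CPU offers and fall back gracefully when it offers nothing special. A minimal sketch of that idea in Python follows. The names `probe_capabilities`, `dot_accelerated`, `IMPLEMENTATIONS` and the `"vector_unit"` label are all hypothetical, and no real accelerator is probed here; only the selection logic is shown.

```python
import os
import platform


def probe_capabilities():
    """Collect a few facts about the host.

    Real code would also probe vector units or accelerators;
    that part is deliberately left out of this sketch.
    """
    return {
        "arch": platform.machine(),    # e.g. "x86_64" or "aarch64"
        "cores": os.cpu_count() or 1,  # cpu_count() may return None
        "accelerators": set(),         # hypothetical: nothing is probed
    }


def dot_generic(a, b):
    """Portable fallback that runs on any CPU."""
    return sum(x * y for x, y in zip(a, b))


def dot_accelerated(a, b):
    """Stand-in for an accelerator-backed routine (hypothetical).

    It reuses the generic code so that the sketch stays runnable.
    """
    return dot_generic(a, b)


# Ordered from most to least specialised: (required accelerator, function).
IMPLEMENTATIONS = [
    ("vector_unit", dot_accelerated),
    (None, dot_generic),
]


def select_dot(capabilities):
    """Return the first implementation whose requirement is satisfied."""
    for required, func in IMPLEMENTATIONS:
        if required is None or required in capabilities["accelerators"]:
            return func
    raise RuntimeError("no usable implementation")


if __name__ == "__main__":
    caps = probe_capabilities()
    dot = select_dot(caps)
    print(caps["arch"], caps["cores"], dot.__name__, dot([1, 2, 3], [4, 5, 6]))
```

The application calls `select_dot` once at start-up and never needs to know which hardware it landed on, which is the property the thesis is after.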

What is the big problem with the X86 architecture? In short, it is 34 years old. It has been following the path of “evolution”, rather than “revolution”, during those 34 years. As technology improved, Intel tried hard to make X86 better. But they always tried to maintain backward compatibility.

They added support for more memory, integrated the FPU and caches onto the chip, made the CPU superscalar, added new instructions (MMX, SSE) and out-of-order execution, introduced hyperthreading followed by multicore designs, put the memory controller on the chip, added new vector instructions (AVX), then an on-die GPU, and so on, and so forth. As a result, a modern X86 CPU has little in common with its ancient ancestor. It behaves in the same way, but internally it is very different.

In this situation, when backward compatibility was guaranteed, what did the software industry do? Nothing! During all 34 years, the application software vendors did nothing. Well, almost nothing.

You are likely to take advantage of multicore processing on your desktop if you are doing some serious audio or video editing. Web browsing can only benefit from multicore if you have a proper browser (Mozilla Firefox still can’t do that, which really upsets 4-core owners). What about multicore support in your word processor? That sounds like a joke.

Transistors shrink as technology improves, so Intel tried its best to make use of the newly available chip floor space with each technological step forward. When clock frequency couldn’t be pushed any higher, adding more cores seemed a viable alternative. The remaining floor space is given over to cache memory. But it cannot go on this way: adding more cores is useless when existing software cannot utilize more than one core.
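The claim that extra cores are wasted on serial software can be put in numbers with Amdahl's law, which the text above does not name but which expresses the same point: if only a fraction of a program can run in parallel, the serial remainder caps the speedup. The sketch below is a rough illustration, and the parallel fractions in it are assumptions picked for the example, not measurements.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speedup on `cores` cores when `parallel_fraction`
    of the single-core run time can be parallelised (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Illustrative fractions: fully serial, half parallel, mostly parallel.
    for fraction in (0.0, 0.5, 0.95):
        for cores in (1, 4, 16):
            speedup = amdahl_speedup(fraction, cores)
            print(f"parallel={fraction:.2f} cores={cores:2d} speedup={speedup:.2f}")
```

A fully serial program (fraction 0) gets a speedup of exactly 1 however many cores are added, and a half-parallel one reaches only 1.6 on four cores.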

How did we end up in such a desperate situation? I believe the answer is backward compatibility.

Software vendors, like spoiled children, joyfully watched Intel and others strive, through all these years, to deliver performance that met the most optimistic expectations.

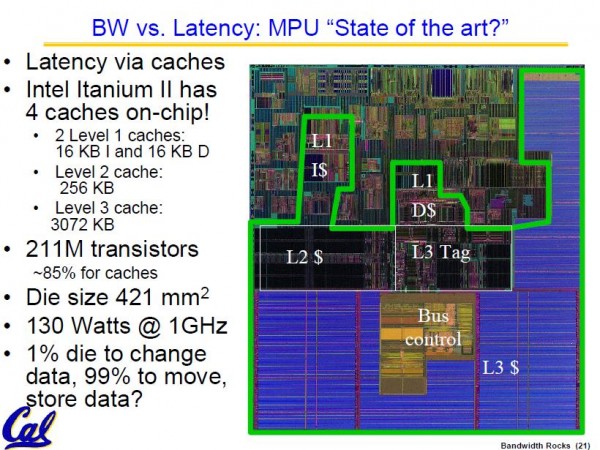

Memory accesses are expensive, as HPC folk know well, so CPU vendors built big on-die caches to minimize the number of memory accesses. Currently, CPUs look really ugly from a bird’s-eye view: much of the chip’s real estate is occupied by caches.

Take a look at the extreme example on this slide from the presentation of Dr. David Patterson, “Why Latency Lags Bandwidth, and What it Means to Computing”:

Everything inside the green border is cache memory. It is there just to minimize the number of cache misses. Otherwise, the software would run slowly and would not deliver the performance that the public expects. (Pictured here is presumably Itanium McKinley, which was a single-core CPU.)

So, we do have multicore CPUs in our desktop computers; it is simply that the ordinary software we use every day is unable to take advantage of these cores. While more and more parallelism was added to CPUs in recent years, software manufacturers just hoped that someone would solve all the problems for them by making the next generation of CPUs faster than the previous one.

Additionally, they were encouraged by the guaranteed backward compatibility. If they knew that the next generation of CPUs would require a complete recompilation of all libraries and applications anyway, they would not be so conservative. They would not rest on their laurels; they would need to make their software suitable for the upcoming hardware. And that would include, first and foremost, the ability to exploit multiple cores.

The compiler industry would receive a significant boost, because compilers able to help with parallelisation would become essential to port the existing software onto new hardware. The market economy failed to create new compilers, because there was no demand from software manufacturers.

Instead of the current monstrous CPUs, we would see designs with smaller, leaner, simpler cores. And we would finally be able to get rid of this outdated X86 architecture.

But wait a little. Didn’t I miss something? Didn’t Intel (and others) try to introduce alternative designs, departing from the X86 architecture, and beg OS, system and application software vendors to rewrite or recompile their software to benefit from the new designs? Of course they did! The Wikipedia article for X86 lists several such attempts, including the Intel i860, the Intel i960 and Itanium, the last being a joint development between Hewlett-Packard and Intel.

So, why didn’t these promising new designs take root (with Itanium being something of an exception, but also falling out of favour)? The answer does not lie in the field of technology. The answer is the market economy.

It’s so hard for software manufacturers to resist the temptation of a “quiet life”, where you don’t have to take any action to rewrite your software for a new architecture, where every new CPU is faster than the previous one and where backward compatibility has a lifetime guarantee.

Even if Intel had the will to break the vicious circle, there would be other companies, Intel’s competitors, that would continue to ship X86 parts to the great joy of software manufacturers. That’s an interesting case where competition, so intrinsic to the market economy, can in fact make matters worse.

Therefore, in my opinion, the X86 architecture with its guaranteed backward compatibility hindered innovation in software more than it encouraged it.

Supporting two competing architectures is difficult even for big companies. Here is how Dr. Andy Grove, then CEO of Intel, described the situation of 1989:

We now had two very powerful chips that we were introducing at just about the same time: the 486, largely based on CISC technology and compatible with all the PC software, and the i860, based on RISC technology, which was very fast but compatible with nothing. We didn’t know what to do. So we introduced both, figuring we’d let the marketplace decide. However, things were not that simple. Supporting a microprocessor architecture with all the necessary computer-related products – software, sales, and technical support – takes enormous resources. Even a company like Intel had to strain to do an adequate job with just one architecture. And now we had two different and competing efforts, each demanding more and more internal resources. Development projects have a tendency to want to grow like the proverbial mustard seed. The fight for resources and for marketing attention (for example, when meeting with the customer, which processor should we highlight) led to internal debates that were fierce enough to tear apart our microprocessor organization. Meanwhile, our equivocation caused our customers to wonder what Intel really stood for, the 486 or i860?

From the book “Only the Paranoid Survive”, quotation taken from here.

Wikipedia even says that Microsoft developed for this i860 chip the operating system that would later become Windows NT. So, it was a great opportunity to drop X86 and start afresh. But Intel “let the marketplace decide”. The market is the collective unconscious, and people unconsciously try to avoid risks by all means possible. Why take a risk on a new architecture if the old one is steadily getting faster every year?

True, the move from X86 to i860 might not have provided new levels of efficiency by itself. Hardware advances in X86 have been tremendous in recent years. However, they were skewed to please single-core workloads.

We must create an environment where the total recompilation of all software is seen not as a problem but rather as an opportunity. I remember the old days when I recompiled the FreeBSD kernel to better suit the capabilities of the X86 CPU I had in my server, and then installed application software by compiling it from source. Gentoo Linux follows the same path of “total compilation”.

Then, if we have this infrastructure for “total compilation” available, we won’t fear the advent of a new architecture, for we will be well prepared for it. With the new compiler (shipped along with the new CPUs), software vendors would, in a matter of hours, recompile their products, be it “Microsoft Windows” or “Mozilla Firefox”.

Interestingly, the server market is more susceptible to this kind of change than the desktop market, because for the Linux and UNIX systems widely employed there, recompilation simply works, out of the box. But this market is under the influence of “psychological inertia”: systems administrators are so concerned with installing “approved”, “tested” and “supported” versions of operating systems that the mere thought of recompiling the operating systems and applications would strike fear into their hearts. This situation must be changed by improving the technology so that custom-compiled versions become as robust as their traditional “approved and tested” alternatives. (By the way, HPC people are more progressive in this respect, because they routinely recompile their applications, and generally are not afraid of frequent changes.)

Hence, the server market is very conservative. I believe the “recompilation revolution” will come from the general computing community, and most probably from the mobile segment. If manufacturers of the software that we run day to day had the necessary means to recompile it easily, they would just as easily welcome the advent of a new CPU architecture. After upgrading your hardware and reinstalling (upgrading?) the operating system, you wouldn’t even notice the difference! (OK, I leave out the device drivers, which must be created beforehand.)

In the mobile world, CPUs come and go, so developers often design their software to be portable between architectures. Also, Debian Linux successfully runs (with all applications, including Mozilla Firefox and OpenOffice.org) on the “ARM Cortex-A8” CPU, which is drastically different from the X86 family — no need to say more, the complete recompilation is possible.

That’s what the acceptance of a new CPU architecture might look like. But, of course, the compiler must be very well tested for that change to be successful.

Now you may ask: how does all this relate to HPC? This is easy. When the new architecture conquers the world, the CPUs will be produced at mass scale, and therefore they will be very attractive for use in HPC clusters. And we will use them. But first we need to make sure that they are designed with our specific HPC considerations in mind.

For example, HPC applications need lots of memory bandwidth. If we currently have one memory controller per CPU die, maybe we need more? And of course we always need huge amounts of I/O bandwidth. Think of Blue Gene/L, where every node has just two cores but is connected by a 3D torus network to 6 neighbours. All six links together give a bandwidth of 1.05 gigabytes/sec for the two cores, that is, about 500 megabytes/sec per core. That’s the bandwidth! Current Beowulf-style compute nodes, with ever-increasing core counts, clearly lack adequate network bandwidth.
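The per-core arithmetic above is easy to reproduce. The sketch below takes the Blue Gene/L figures from the paragraph (6 links, 1.05 gigabytes/sec in total, 2 cores) and, purely for contrast, a made-up commodity node. The 16-core count and the 1000 megabytes/sec link of that second node are assumptions chosen for illustration and do not describe any particular product.

```python
def per_core_bandwidth(node_bandwidth_mb_s, cores):
    """Network bandwidth available to each core, in megabytes/sec."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return node_bandwidth_mb_s / cores


# Figures quoted in the text for a Blue Gene/L node (decimal units).
BGL_NODE_MB_S = 1050.0  # 1.05 gigabytes/sec over all six torus links
BGL_LINKS = 6
BGL_CORES = 2

# Hypothetical commodity node: both numbers are illustrative assumptions.
HYP_NODE_MB_S = 1000.0
HYP_CORES = 16

if __name__ == "__main__":
    per_link = BGL_NODE_MB_S / BGL_LINKS
    bgl = per_core_bandwidth(BGL_NODE_MB_S, BGL_CORES)
    hyp = per_core_bandwidth(HYP_NODE_MB_S, HYP_CORES)
    print(f"Blue Gene/L: {per_link:.0f} MB/s per link, {bgl:.0f} MB/s per core")
    print(f"Hypothetical node: {hyp:.1f} MB/s per core")
```

With these inputs the Blue Gene/L node works out to 525 megabytes/sec per core, while the hypothetical node leaves 62.5 megabytes/sec per core, which is the kind of gap the paragraph is pointing at.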

In the end: the new architecture will eventually come. To allow for a smooth transition, we first need a software revolution. Software will become multicore-friendly, and will be easily portable to different architectures. This change might be introduced by the general computing community, and not necessarily by the server market or HPC. The resulting architecture will also be usable for HPC purposes if we clearly specify what we want it to include.

(Update: In June 2013 we came up with this proposal: “Many-core and InfiniBand: Making Your Own CPUs Gives You Independence”)

I hear your call to “think parallel”, my friend. Oh, I do.

Count me in the choir.